In the rapidly expanding universe of data science and statistical computing, the R programming language stands as a titan. Revered for its robust capabilities, open-source nature, and an unparalleled ecosystem of statistical techniques and graphical tools, R has become an indispensable asset for researchers, statisticians, data scientists, and analysts across virtually every domain. From academic research to cutting-edge industry applications, R provides a flexible, powerful, and free environment for exploring, manipulating, analyzing, and visualizing data.

At its core, R is an integrated suite of software facilities for data manipulation, calculation, and graphical display. It emerged from the S language, a proprietary statistical system developed at Bell Labs, and was initially created by Ross Ihaka and Robert Gentleman at the University of Auckland, New Zealand. The project has since evolved into a global, community-driven endeavor, leading to its widespread adoption and continuous development. R’s appeal lies not only in its comprehensive array of statistical functions but also in its commitment to providing an efficient, comfortable, and coherent workspace for users, regardless of their proficiency level. This cross-platform software is meticulously designed to support everything from basic data processing to complex machine learning algorithms, making it a cornerstone of modern data analysis.

The Genesis and Philosophy of R

The story of R begins in the early 1990s, rooted in the S programming language. S was developed at Bell Labs (now Nokia Bell Labs) in the 1970s and 80s as a statistical programming environment. It quickly gained popularity among statisticians for its powerful data analysis capabilities and intuitive syntax. However, S was proprietary software, which limited its accessibility and the pace of its evolution outside of Bell Labs. Recognizing the potential for an open-source alternative, Ross Ihaka and Robert Gentleman embarked on creating a new language that would implement the core ideas of S, but with a crucial difference: it would be free and open for anyone to use, modify, and distribute. This commitment to openness is the bedrock of R’s philosophy.

The R project officially began in 1993, and the first public version was released in 1995. The early days saw a small but dedicated community of developers contributing to its growth. By 1997, the “R Core Team” was formed, a group of volunteers responsible for the ongoing development and maintenance of the base R system. This team continues to oversee the language’s evolution, ensuring its stability, security, and continuous improvement. The choice of an open-source model has been instrumental in R’s success. It fosters a vibrant, collaborative environment where statisticians and programmers worldwide can contribute new packages, identify and fix bugs, and share knowledge. This collective effort ensures that R remains at the forefront of statistical innovation, constantly adapting to new methodologies and data challenges.

The philosophy extends beyond mere access; it promotes transparency and reproducibility in research. Because R is open source, the underlying algorithms and implementations are visible to everyone. This allows researchers to scrutinize methods, understand their limitations, and replicate results, which is vital for scientific integrity. Furthermore, R’s command-line interface and script-based nature encourage users to document their analytical workflows, making it easier to share, review, and reproduce analyses. This emphasis on methodical and traceable research practices has made R particularly popular in academia and scientific research, where methodological rigor is paramount. The R Project for Statistical Computing, overseen by the R Foundation, encapsulates this philosophy, striving to provide a robust, comprehensive, and freely available environment for statistical development and data analysis.

Core Capabilities: Data Manipulation, Statistical Analysis, and Visualization

R’s utility stems from its extensive and integrated set of functions designed for every stage of the data analysis pipeline. From the initial acquisition and cleaning of data to complex modeling and high-quality graphical output, R offers a comprehensive toolkit that empowers users to derive insights from their data.

Data Manipulation and Processing

One of R’s fundamental strengths lies in its ability to efficiently process and store various forms of data. It natively handles a range of data structures, including vectors (one-dimensional arrays of the same data type), matrices (two-dimensional arrays of the same data type), arrays (multi-dimensional arrays), data frames (lists of vectors of equal length, akin to tables in a database or spreadsheet), and lists (generic vectors containing elements of different types). The data frame, in particular, is a cornerstone of R, providing a flexible way to represent tabular data.
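As a minimal sketch of the structures just described, the following base R snippet builds one of each. The object names (ages, m, a, person, people) are illustrative only.

```r
# Vector: one-dimensional, all elements share one type
ages <- c(25, 30, 41)

# Matrix: two-dimensional, all elements share one type
m <- matrix(1:6, nrow = 2, ncol = 3)

# Array: the same idea extended to three or more dimensions
a <- array(1:24, dim = c(2, 3, 4))

# List: elements may have different types and lengths
person <- list(name = "Alice", age = 25, scores = c(90, 85))

# Data frame: a list of equal-length columns, displayed as a table
people <- data.frame(
  name = c("Alice", "Bob", "Carol"),
  age  = ages
)

str(people)        # compact overview of column names and types
dim(m)             # number of rows and columns of the matrix
person$scores[2]   # access a list element by name, then by position
```

Because a data frame is itself a list of columns, people$age returns the original numeric vector.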

R offers powerful operators for matrix calculations, which are crucial for many statistical methods, especially in linear algebra and machine learning. Beyond base R, packages like dplyr (part of the Tidyverse) have revolutionized data manipulation, providing a grammar for data wrangling that is intuitive and highly efficient. Functions like filter(), select(), mutate(), arrange(), and group_by() allow users to perform complex data transformations with concise and readable code. For extremely large datasets, packages like data.table offer high-performance alternatives, further enhancing R’s capacity for handling diverse data challenges.
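The dplyr verbs named above can be chained into a single pipeline. The sketch below assumes dplyr is already installed and uses the mtcars dataset that ships with R. The threshold, the derived column kpl, and the approximate conversion factor are illustrative choices, and summarise() is an additional dplyr verb not listed above.

```r
library(dplyr)  # assumption: dplyr is installed

# mtcars ships with R: one row per car model
result <- mtcars %>%
  filter(hp > 100) %>%              # keep rows matching a condition
  select(mpg, cyl, hp, wt) %>%      # keep only these columns
  mutate(kpl = mpg * 0.425) %>%     # add a derived column (approx. km per litre)
  group_by(cyl) %>%                 # define groups for the summary
  summarise(mean_kpl = mean(kpl), n = n()) %>%
  arrange(desc(mean_kpl))           # sort groups, best fuel economy first

print(result)
```

Each verb takes a data frame and returns a data frame, which is what lets the steps read top to bottom in the order they are applied.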

Comprehensive Statistical Analysis

R’s reputation as a premier statistical programming language is well-earned. It provides an unparalleled variety of statistical techniques, covering virtually every subfield of statistics. Users can access integrated functions tailored for:

- Descriptive Statistics: Calculating means, medians, modes, standard deviations, variances, and creating frequency tables to summarize data.

- Inferential Statistics: Performing hypothesis testing, including t-tests, ANOVA (Analysis of Variance), chi-squared tests, and non-parametric tests to draw conclusions about populations based on sample data.

- Regression Analysis: Implementing a wide range of regression models, such as simple and multiple linear regression, logistic regression, polynomial regression, and generalized linear models (GLMs), to understand relationships between variables.

- Time Series Analysis: Tools for analyzing time-dependent data, including ARIMA models, exponential smoothing, and forecasting techniques.

- Multivariate Analysis: Techniques like Principal Component Analysis (PCA), Factor Analysis, Cluster Analysis, and Discriminant Analysis for exploring relationships in complex, multi-dimensional datasets.

- Machine Learning: With an ever-growing collection of packages, R supports a vast array of machine learning algorithms, including decision trees, random forests, support vector machines (SVMs), gradient boosting machines (GBMs), neural networks, and deep learning frameworks (via interfaces to TensorFlow and Keras).

The modular nature of R, where new statistical methods are often implemented as packages, means that the language continuously evolves, incorporating the latest advancements in statistical theory and computational methodology. This makes R an incredibly dynamic and powerful tool for intermediate and advanced data analysis.
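To give a feel for a few of the techniques listed above, here is a short sketch using only functions from base R and its bundled stats package, applied to the built-in mtcars dataset. The choice of variables is illustrative, not a recommended analysis.

```r
# Descriptive statistics
mean(mtcars$mpg)
sd(mtcars$mpg)
table(mtcars$cyl)            # frequency table of cylinder counts

# Inferential statistics: Welch two-sample t-test of mpg by
# transmission type (am: 0 = automatic, 1 = manual)
tt <- t.test(mpg ~ am, data = mtcars)

# Regression: multiple linear regression and a logistic GLM
fit_lm  <- lm(mpg ~ wt + hp, data = mtcars)
fit_glm <- glm(am ~ wt, data = mtcars, family = binomial)

# Multivariate analysis: PCA on four standardized variables
pca <- prcomp(mtcars[, c("mpg", "disp", "hp", "wt")], scale. = TRUE)

tt$p.value                     # p-value of the t-test
coef(fit_lm)                   # estimated regression coefficients
summary(pca)$importance[2, ]   # proportion of variance per component
```

Calling summary() on fit_lm or fit_glm prints the usual coefficient table with standard errors and p-values.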

Advanced Graphical Visualization

A picture is worth a thousand words, and in data analysis, effective visualization is paramount for understanding patterns, identifying anomalies, and communicating findings. R excels at visualizing data, offering sophisticated capabilities to produce high-quality plots suitable for both on-screen exploration and publication-quality print output.

Base R graphics provide a powerful foundation for creating a wide array of plots, including scatter plots, histograms, box plots, bar charts, and more complex statistical graphs. Users have granular control over every aesthetic element of a plot, from colors and fonts to axis labels and legends. However, it is the ggplot2 package, a core component of the Tidyverse, that truly revolutionized data visualization in R. Based on the “Grammar of Graphics,” ggplot2 allows users to build complex and aesthetically pleasing plots layer by layer, mapping data variables to visual aesthetics (like color, size, shape) and geometric objects (points, lines, bars). This systematic approach results in highly customizable, informative, and visually appealing graphics that can reveal intricate data relationships. Beyond ggplot2, other packages like plotly and Shiny enable interactive visualizations and web applications, further extending R’s reach in data storytelling.
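The layered approach can be sketched as follows, assuming ggplot2 is installed. The dataset (mtcars), the aesthetic mappings, and the labels are illustrative choices.

```r
library(ggplot2)  # assumption: ggplot2 is installed

# Map variables to aesthetics, then add geometric layers one by one
p <- ggplot(mtcars, aes(x = wt, y = mpg, colour = factor(cyl))) +
  geom_point(size = 2) +                    # layer 1: one point per car
  geom_smooth(method = "lm", se = FALSE) +  # layer 2: a linear fit per group
  labs(
    x = "Weight (1000 lbs)",
    y = "Miles per gallon",
    colour = "Cylinders",
    title = "Fuel economy versus weight"
  )
```

The plot is stored as an object and drawn only when printed: typing p at the console or calling print(p) renders it, and ggsave() can write it to a file.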

The R Ecosystem: Packages, Development Environments, and Community Support

R’s immense power and flexibility are not solely attributable to its base system but largely to its expansive and vibrant ecosystem. This ecosystem comprises thousands of user-contributed packages, sophisticated development environments, and a robust global community that fuels its continuous growth and innovation.

The Power of Packages: CRAN and Beyond

The true strength of R lies in its “packages” – collections of functions, data, and compiled code in a well-defined format. These packages extend the functionality of base R, offering specialized tools for specific tasks. The primary repository for R packages is the Comprehensive R Archive Network (CRAN). As of late 2023, CRAN hosts over 19,000 packages, covering an astonishing breadth of topics: from econometrics and bioinformatics to machine learning, geospatial analysis, and web development. This vast library means that almost any statistical method or data analysis task imaginable likely has an R package dedicated to it, often with multiple implementations. Installing a package from CRAN is a simple command, making it incredibly easy for users to access and leverage cutting-edge tools developed by experts worldwide.
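The install command in question is install.packages(). The sketch below wraps it in a small helper that only installs when the package is missing. The helper name install_if_missing is hypothetical and not part of R itself.

```r
# Hypothetical helper: install a package from CRAN only if it is
# not already available on this machine
install_if_missing <- function(pkg) {
  if (!requireNamespace(pkg, quietly = TRUE)) {
    install.packages(pkg)   # downloads from the configured CRAN mirror
  }
  invisible(requireNamespace(pkg, quietly = TRUE))
}

install_if_missing("stats")     # ships with R, so nothing is downloaded
# install_if_missing("dplyr")   # would fetch dplyr from CRAN if needed
# library(dplyr)                # attach an installed package for the session
```

Installation happens once per machine, while library() is called in every session that uses the package.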

Beyond CRAN, other specialized repositories cater to particular scientific domains. For example, Bioconductor is a renowned repository for R packages dedicated to high-throughput genomic data analysis, providing tools for gene expression, sequencing, and proteomics. These specialized repositories ensure that R remains at the forefront of highly technical fields, offering curated and peer-reviewed software solutions.

Integrated Development Environments (IDEs)

While R can be run from a basic command line, an Integrated Development Environment (IDE) significantly enhances productivity and user experience. The most prominent and widely adopted IDE for R is RStudio Desktop, developed by Posit Software (formerly RStudio, PBC). Posit’s RStudio Desktop transformed how data scientists interact with R by providing a comfortable and coherent workspace that integrates essential tools:

- Console: For executing R commands directly.

- Script Editor: For writing, saving, and managing R scripts, complete with syntax highlighting and code completion.

- Environment Pane: To inspect loaded data objects, variables, and functions.

- History Pane: To review past commands.

- Files, Plots, Packages, Help, Viewer Panes: For managing files, viewing plots, installing/loading packages, accessing documentation, and previewing web content.

RStudio Desktop remains the free desktop IDE, while Posit offers Posit Workbench and Posit Connect as its enterprise products. RStudio Desktop has become the de facto standard for R development due to its user-friendly interface, powerful debugging tools, and seamless integration with other data science technologies like Git for version control and R Markdown for dynamic reporting. Posit Software continues to be a major contributor to the R ecosystem, developing not just the IDE but also many popular R packages (like those in the Tidyverse) and fostering the R community.

The Tidyverse and Modern R Workflows

A significant development within the R ecosystem is the Tidyverse, a collection of R packages designed to work together seamlessly for data science. Developed primarily by Hadley Wickham and others at Posit Software, the Tidyverse emphasizes a consistent grammar for data manipulation and visualization. Key packages include:

- dplyr: For data manipulation.

- ggplot2: For data visualization.

- tidyr: For tidying data (making it “long” or “wide”).

- readr: For fast and friendly reading of common data files.

- purrr: For functional programming.

- tibble: For modern data frames.

- stringr: For string manipulation.

- forcats: For working with categorical variables (factors).

The Tidyverse promotes a clean, readable, and efficient workflow, making complex data tasks more approachable and consistent. Its philosophy of “tidy data” (where each variable is a column, each observation is a row, and each type of observational unit is a table) has profoundly influenced data analysis practices in R.

Vibrant Community and Support

R is supported by an active and enthusiastic global community. This community provides an invaluable resource for learning, problem-solving, and staying updated with the latest developments. Key avenues of support include:

- Mailing Lists: Official R-help and other specialized mailing lists where users can ask questions and get advice from experts.

- Stack Overflow: A popular Q&A website where R-related questions receive rapid and thorough answers from a vast user base.

- R-bloggers: A blog aggregator that compiles posts from numerous R bloggers, offering tutorials, news, and insights.

- Conferences and Meetups: Events like useR! and RStudio (Posit) conferences, as well as local R user groups (R-Ladies, RUGs), foster networking, knowledge sharing, and collaboration.

- Online Courses and Books: An abundance of free and paid learning resources makes it accessible for beginners to advanced users.

This collective support system ensures that R users are rarely left without assistance, contributing significantly to the language’s appeal and continued growth.

Practical Applications and Industry Impact

R’s versatility and powerful statistical capabilities have made it an invaluable tool across a diverse range of industries and academic disciplines. Its ability to perform efficient data processing and storage, coupled with advanced analytical and graphical features, means R is deployed in scenarios that demand rigorous data interpretation and actionable insights.

Academia and Research

R’s origins and open-source nature have firmly entrenched it within academic institutions worldwide. It is the language of choice for countless statisticians, econometricians, biologists, psychologists, and social scientists. Researchers utilize R for:

- Statistical Modeling: Developing and testing complex statistical models for phenomena in various fields.

- Data Analysis for Publications: Generating the analytical results and high-quality visualizations required for peer-reviewed scientific papers.

- Teaching and Learning: R is widely taught in university statistics, data science, and econometrics courses, providing students with practical skills in computational statistics.

- Reproducible Research: R Markdown, a dynamic reporting tool, allows researchers to combine R code, its output, and explanatory text into a single document, facilitating reproducible research.

Finance and Economics

In the highly data-driven world of finance, R is extensively used for:

- Quantitative Finance: Developing complex financial models, portfolio optimization, risk management, and algorithmic trading strategies.

- Econometrics: Analyzing economic data, forecasting market trends, and evaluating economic policies.

- Fraud Detection: Building predictive models to identify fraudulent transactions and activities.

- Time Series Analysis: Analyzing stock prices, commodity markets, and other financial time series data.

Its capability to handle large datasets and perform sophisticated statistical analysis makes it ideal for navigating the complexities of financial markets.

Marketing and Business Analytics

Businesses leverage R to gain competitive advantages through data-driven insights:

- Customer Segmentation: Identifying distinct customer groups for targeted marketing campaigns.

- Predictive Analytics: Forecasting sales, customer churn, and product demand.

- Market Basket Analysis: Discovering associations between products to optimize store layouts and promotions.

- A/B Testing: Analyzing the results of experiments to optimize website design, advertising campaigns, and product features.

- Supply Chain Optimization: Improving logistics and inventory management.

R’s ability to integrate with various data sources and produce clear visualizations helps businesses make informed decisions.

Healthcare and Pharmaceuticals

The healthcare and pharmaceutical sectors rely heavily on R for critical data analysis:

- Clinical Trials: Analyzing efficacy and safety data from drug trials, ensuring compliance with regulatory standards.

- Epidemiology: Studying disease patterns, risk factors, and public health interventions.

- Drug Discovery: Analyzing molecular data to identify potential drug candidates.

- Genomics and Proteomics: Utilizing Bioconductor packages for complex bioinformatics analyses.

R’s precision and statistical rigor are essential in these life-critical applications.

Government and Public Sector

Government agencies and non-profit organizations use R for:

- Policy Analysis: Evaluating the impact of public policies and programs.

- Official Statistics: Producing and disseminating national statistics.

- Demographic Analysis: Studying population trends and characteristics.

- Environmental Modeling: Analyzing climate data and environmental impacts.

Its open-source nature and robust capabilities make it a cost-effective and powerful solution for public sector data needs.

Getting Started with R: A Beginner’s Guide

For those new to the world of statistical computing, R might seem daunting at first, but its extensive resources and supportive community make the learning curve manageable. Starting with R involves a few straightforward steps and a commitment to exploring its vast capabilities.

Installation and Setup

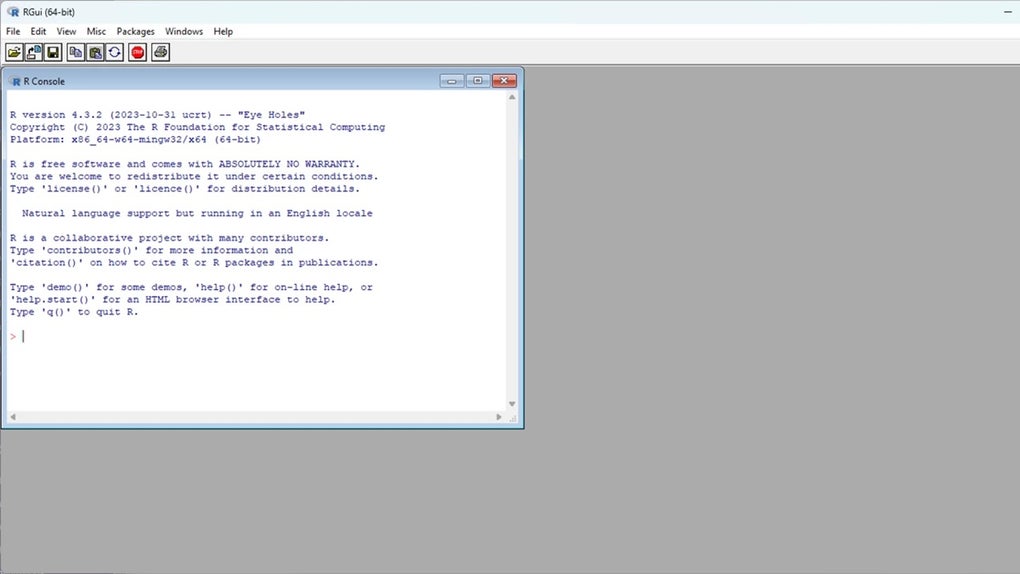

The first step is to download and install R. The base R distribution can be obtained for free from the official R Project website (CRAN mirror). It’s available for Windows, macOS, and various Linux distributions, reinforcing its status as a robust cross-platform software. Once R is installed, it is highly recommended to install an Integrated Development Environment (IDE) like RStudio Desktop (now developed by Posit Software). RStudio Desktop is also free and significantly enhances the user experience by providing a more interactive and organized workspace. You can download RStudio Desktop from the Posit Software website. Installing both R and RStudio Desktop will give you a comprehensive environment ready for data analysis.

Basic Syntax and Data Structures

Once your environment is set up, you can begin exploring R’s syntax. R operates on objects, where everything you create or use is an object (variables, functions, data).

- Variables: You assign values to variables using the <- operator (or =): my_variable <- 10.

- Vectors: The most basic data structure, created using c() for “combine”: numbers <- c(1, 2, 3, 4, 5).

- Data Frames: Essential for tabular data. You can create one from vectors or load it from external files: my_data <- data.frame(name = c("Alice", "Bob"), age = c(25, 30)).

- Functions: R is full of functions. You call them by their name followed by parentheses containing arguments: mean(numbers), plot(x, y).

A good starting point is to practice loading data, performing basic descriptive statistics (like mean(), sd(), summary()), and creating simple plots (like plot() or hist()).
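A first practice session might look like the sketch below, which uses only base R. The object name scaled is illustrative.

```r
# Assignment, vectors, and a small data frame
my_variable <- 10
numbers <- c(1, 2, 3, 4, 5)
my_data <- data.frame(name = c("Alice", "Bob"), age = c(25, 30))

# Basic descriptive statistics
mean(numbers)      # arithmetic mean
sd(numbers)        # sample standard deviation
summary(numbers)   # minimum, quartiles, mean, maximum
summary(my_data)   # per-column summary of a data frame

# Arithmetic is vectorized: every element is multiplied by 10
scaled <- numbers * my_variable

# hist(numbers) or plot(numbers) would draw a simple plot
```

Typing the name of any object, such as scaled, at the console prints its contents.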

Loading and Exploring Data

R excels at reading data from various formats. You can load data from CSV files (read.csv()), Excel files (using the readxl package), databases (using DBI and specific database drivers), or even web APIs. Once loaded, you can use functions like head() to view the first few rows, str() to see the structure of your data, and summary() to get statistical summaries of each column. This initial exploration phase is crucial for understanding the characteristics of your dataset.
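The load-and-explore steps can be sketched with base R alone. To keep the example self-contained, it first writes a small CSV to a temporary file. The data (the first 20 rows of the built-in iris dataset) and the names csv_path and flowers are illustrative.

```r
# Write a small CSV to a temporary file so the example is self-contained
csv_path <- tempfile(fileext = ".csv")
write.csv(head(iris, 20), csv_path, row.names = FALSE)

# Read the file back into a data frame
flowers <- read.csv(csv_path)

head(flowers)      # first six rows
str(flowers)       # column names, types, and sample values
summary(flowers)   # per-column statistical summary

unlink(csv_path)   # remove the temporary file
```

With a real dataset, csv_path would simply be the path to your own file.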

Learning Resources and Continuous Development

The R community offers an abundance of learning resources:

- Official Documentation: Every R function and package comes with extensive help documentation, accessible via ?function_name.

- Online Courses: Platforms like Coursera, DataCamp, edX, and YouTube offer numerous R courses for all skill levels.

- Books: Many excellent books cover R, from introductory guides to specialized topics like “R for Data Science” (a highly recommended starting point for the Tidyverse).

- Blogs and Tutorials: Websites like R-bloggers, PhanMemFree.org, and various data science blogs regularly publish tutorials and articles.

The journey with R is one of continuous learning. As new packages are developed and methodologies emerge, there’s always something new to explore. The dynamic nature of the R ecosystem ensures that users can always find cutting-edge tools and techniques to enhance their data analysis capabilities.

In conclusion, R is far more than just a programming language; it is a comprehensive, open-source statistical ecosystem. Its integrated tools for data manipulation, an unmatched variety of statistical techniques, and powerful graphical visualization capabilities make it an indispensable asset for anyone serious about data analysis. Coupled with a vibrant community and robust development environments like RStudio Desktop, R provides an efficient, comfortable, and coherent workspace that continues to drive innovation in data science across all fields. Its free availability and cross-platform compatibility further cement its position as a cornerstone of modern statistical computing.
